- Published on

Matryoshka Phenomenon and applications in AI

- Author: Lucas Xu (@xianminx)

MRL (Matryoshka Representation Learning) is like a layered index of the data.

The underlying idea is that information is compressed in a nested manner, much like the hierarchy of the DNS system or the layered catalog of a library.

This design of vector embeddings supports indexing at different granularities.

This resembles how our brain consumes information.

Matryoshka Representation Learning

OpenAI's text-embedding-3-small and text-embedding-3-large models use MRL so that their embeddings can be shortened. From OpenAI's announcement:

Both of our new embedding models were trained with a technique that allows developers to trade-off performance and cost of using embeddings. Specifically, developers can shorten embeddings (i.e. remove some numbers from the end of the sequence) without the embedding losing its concept-representing properties by passing in the dimensions API parameter. For example, on the MTEB benchmark, a text-embedding-3-large embedding can be shortened to a size of 256 while still outperforming an unshortened text-embedding-ada-002 embedding with a size of 1536.

This means the embedding vector is ordered from abstract to concrete, from coarse grain to fine grain: the leading dimensions carry the broad meaning and the later ones add detail.
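Shortening an embedding by hand, as the quote above describes, amounts to keeping a prefix of the vector. Here is a minimal sketch, assuming the vector is already available as a NumPy array. The helper name `shorten_embedding` is hypothetical, the random vector is only a stand-in for a real embedding, and rescaling to unit length afterwards is an assumption that matters when similarity is computed with dot products.

```python
import numpy as np


def shorten_embedding(embedding: np.ndarray, dims: int) -> np.ndarray:
    """Keep the first `dims` values and rescale the result to unit length."""
    if not 0 < dims <= embedding.shape[-1]:
        raise ValueError("dims must be between 1 and the embedding size")
    prefix = embedding[..., :dims]
    norm = np.linalg.norm(prefix, axis=-1, keepdims=True)
    # Guard against division by zero for an all-zero prefix.
    return prefix / np.clip(norm, 1e-12, None)


# Stand-in for a real 1536-dimensional embedding (random values, illustration only).
rng = np.random.default_rng(0)
full = rng.normal(size=1536)
full /= np.linalg.norm(full)

short = shorten_embedding(full, 256)
print(short.shape, float(np.linalg.norm(short)))
```

The shortened vector points in the same direction as the first 256 values of the original; only its length is rescaled.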

The MRL paper summarizes the approach as follows. Matryoshka Representation Learning (MRL) encodes information at different granularities and allows a single embedding to adapt to the computational constraints of downstream tasks. MRL minimally modifies existing representation learning pipelines and imposes no additional cost during inference and deployment. MRL learns coarse-to-fine representations that are at least as accurate and rich as independently trained low-dimensional representations. The flexibility within the learned Matryoshka Representations offers: (a) up to 14x smaller embedding size for ImageNet-1K classification at the same level of accuracy; (b) up to 14x real-world speed-ups for large-scale retrieval on ImageNet-1K and 4K; and (c) up to 2% accuracy improvements for long-tail few-shot classification, all while being as robust as the original representations. Finally, the authors show that MRL extends seamlessly to web-scale datasets (ImageNet, JFT) across various modalities: vision (ViT, ResNet), vision + language (ALIGN) and language (BERT). MRL code and pretrained models are open-sourced.
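One way the retrieval speed-ups can arise is a two-stage search: shortlist candidates with a short prefix of each embedding, then rerank only the shortlist with the full vectors. The sketch below shows the mechanics under stated assumptions. The function name `funnel_search`, the sizes, and the random corpus are all hypothetical, and random vectors do not have the coarse-to-fine property of a trained Matryoshka model.

```python
import numpy as np


def normalize(x: np.ndarray) -> np.ndarray:
    """Scale vectors to unit length along the last axis."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def funnel_search(query, corpus, short_dims=64, shortlist_size=50, top_k=5):
    """Shortlist with the first `short_dims` dimensions, rerank with all of them."""
    # Stage 1: cheap cosine scores on the truncated vectors.
    coarse = normalize(corpus[:, :short_dims]) @ normalize(query[:short_dims])
    shortlist = np.argsort(-coarse)[:shortlist_size]
    # Stage 2: exact cosine scores, computed only for the shortlist.
    fine = normalize(corpus[shortlist]) @ normalize(query)
    return shortlist[np.argsort(-fine)[:top_k]]


rng = np.random.default_rng(0)
corpus = rng.normal(size=(1000, 256))
# A query that is a slightly perturbed copy of document 42.
query = corpus[42] + 0.01 * rng.normal(size=256)

result = funnel_search(query, corpus)
print(result)
```

The first stage touches every document but only a quarter of each vector here; the second stage uses full vectors but only for 50 documents.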

Hugging Face has a great blog post on Matryoshka Embedding Models: 🪆 Introduction to Matryoshka Embedding Models.

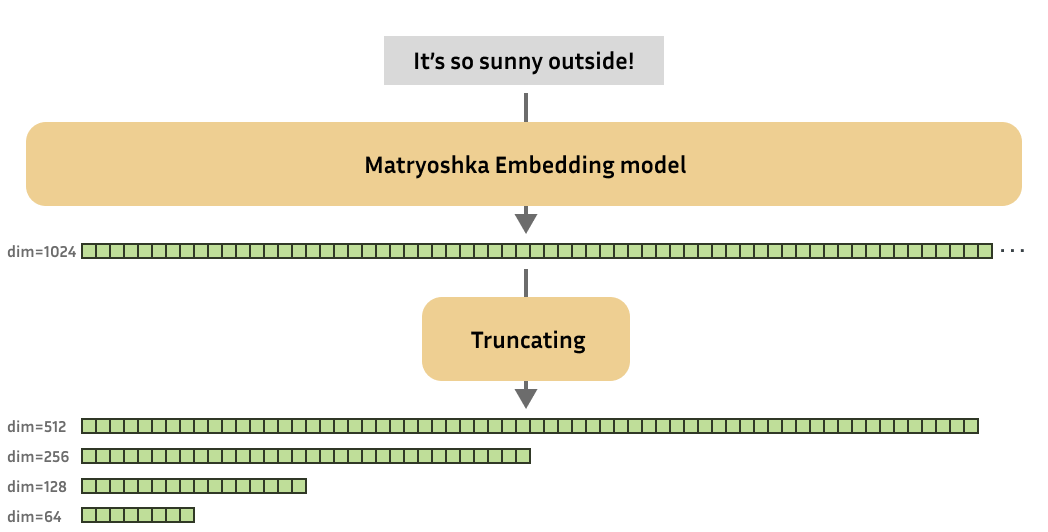

For Matryoshka Embedding models, a training step also involves producing embeddings for your training batch, but then you use some loss function to determine not just the quality of your full-size embeddings, but also the quality of your embeddings at various different dimensionalities. For example, output dimensionalities are 768, 512, 256, 128, and 64. The loss values for each dimensionality are added together, resulting in a final loss value. The optimizer will then try and adjust the model weights to lower this loss value.
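The training step described above can be sketched as a sum of losses over truncated embeddings. This is a minimal NumPy illustration, not a training loop. The base loss here is an in-batch contrastive (InfoNCE-style) loss, which is an assumption since the description only says "some loss function"; the function names, the temperature, and the random batch are hypothetical.

```python
import numpy as np


def info_nce(anchors: np.ndarray, positives: np.ndarray, temperature: float = 0.05) -> float:
    """In-batch contrastive loss: row i of `anchors` should match row i of `positives`."""
    a = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    p = positives / np.linalg.norm(positives, axis=1, keepdims=True)
    logits = (a @ p.T) / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))


def matryoshka_loss(anchors, positives, dims=(768, 512, 256, 128, 64)):
    """Evaluate the base loss on each prefix length and add the results together."""
    per_dim = {d: info_nce(anchors[:, :d], positives[:, :d]) for d in dims}
    return sum(per_dim.values()), per_dim


# Random stand-ins for a batch of 8 paired embeddings of size 768.
rng = np.random.default_rng(0)
anchors = rng.normal(size=(8, 768))
positives = anchors + 0.1 * rng.normal(size=(8, 768))

total, per_dim = matryoshka_loss(anchors, positives)
print(total, per_dim)
```

Because every prefix contributes to the total, lowering the loss pushes the model to make the first 64 dimensions useful on their own, then the first 128, and so on.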

What is the Matryoshka Phenomenon?

- Matryoshka vector embedding
- Image vector embedding
- Large text embedding
- Structural information

Matryoshka

The "Matryoshka phenomenon" in AI refers to the concept of nested structures, similar to Russian Matryoshka dolls, where smaller, simpler representations are contained within larger, more complex ones. This concept is being applied in various areas of AI, particularly to improve efficiency and flexibility. Here's a breakdown:

Key Concepts:

- Nested Representations:
  - The core idea is that information can be organized hierarchically, with simpler versions of the data embedded within more detailed versions.
  - This allows AI models to access information at different levels of granularity, depending on the task and available resources.
- Efficiency and Flexibility:
  - Matryoshka-inspired techniques aim to make AI models more efficient by allowing them to operate at lower levels of complexity when high accuracy is not required.
  - This is particularly important for deploying AI on devices with limited resources, such as mobile phones or IoT devices.

Examples in AI:

- Matryoshka Quantization:
  - This technique, developed by Google DeepMind, focuses on optimizing the precision of AI models.
  - It allows a single model to operate at multiple precision levels (e.g., 8-bit, 4-bit, 2-bit), reducing storage and computational requirements without significantly sacrificing accuracy.
  - This is like having a set of Matryoshka dolls where each doll represents a different level of precision.
- Matryoshka Sparse Autoencoders (SAEs):
  - This approach focuses on learning nested feature representations.
  - It trains multiple nested SAEs of increasing size simultaneously, allowing the model to learn features at different levels of abstraction.
  - This allows for a more robust and meaningful feature learning process.
- Matryoshka in Vector Embeddings and Text Summarization:
  - The concept of nesting can be applied to vector embeddings, where lower-dimensional embeddings are nested within higher-dimensional embeddings. This allows flexible use of embeddings: lower dimensions for faster, less accurate tasks, and higher dimensions for more accurate but slower tasks.
  - In text summarization, a Matryoshka-like approach can be used to generate summaries of varying lengths, where shorter summaries are nested within longer, more detailed summaries.
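The nesting behind the Matryoshka Quantization item above can be illustrated with integer codes, assuming the lower-precision codes are taken from the most significant bits of an 8-bit code. This sketch uses plain bit truncation and a simple min-max quantizer; both are illustrative assumptions rather than the published training method, and the name `slice_msb` is hypothetical.

```python
import numpy as np


def slice_msb(codes: np.ndarray, bits: int) -> np.ndarray:
    """Keep the `bits` most significant bits of unsigned 8-bit codes."""
    if not 1 <= bits <= 8:
        raise ValueError("bits must be between 1 and 8")
    return (codes >> (8 - bits)).astype(np.uint8)


# Toy weights, quantized to 8-bit codes with a simple min-max scheme (an assumption).
weights = np.array([-0.9, -0.2, 0.0, 0.35, 0.8], dtype=np.float32)
lo, hi = float(weights.min()), float(weights.max())
q8 = np.round((weights - lo) / (hi - lo) * 255).astype(np.uint8)

q4 = slice_msb(q8, 4)  # 4-bit codes, values in 0..15
q2 = slice_msb(q8, 2)  # 2-bit codes, values in 0..3
print(q8, q4, q2)
```

The 2-bit code is also the top two bits of the 4-bit code, so each precision level sits inside the next one, like the dolls.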

In essence:

The Matryoshka phenomenon in AI is about creating AI models that are more adaptable and resource-efficient. By organizing information in a nested manner, these models can dynamically adjust their complexity to meet the demands of different tasks and environments.

Connection to CNN

The "Matryoshka" concept in AI is indeed closely tied to information compression and, by extension, to how Convolutional Neural Networks (CNNs) and other neural network architectures function. Here's a breakdown of the connections:

1. Information Compression:

- Hierarchical Representation:
  - The essence of the Matryoshka doll is that it contains progressively smaller versions of itself. This mirrors how information can be compressed: by representing it at varying levels of detail.
  - In AI, this translates to creating models that can provide "summaries" of data at different granularities. A high-dimensional representation holds the most detailed information, while lower-dimensional versions offer increasingly compressed summaries.
- Efficient Storage and Transmission:
  - Just as compressed files take up less space, compressed AI representations require less storage and bandwidth. This is crucial for deploying AI on resource-constrained devices or for transmitting large datasets.
  - Matryoshka-like models enable this by allowing you to choose the level of compression that suits your needs.
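To make the storage point concrete, here is a small back-of-the-envelope calculation. It assumes one million vectors stored as 32-bit floats with no index overhead; the function name and the corpus size are hypothetical.

```python
def embedding_storage_bytes(num_vectors: int, dims: int, bytes_per_value: int = 4) -> int:
    """Raw storage for `num_vectors` embeddings of `dims` values each."""
    return num_vectors * dims * bytes_per_value


# Compare the footprint of one million float32 vectors at several prefix lengths.
for dims in (768, 512, 256, 128, 64):
    mib = embedding_storage_bytes(1_000_000, dims) / 2**20
    print(f"{dims:>4} dims: {mib:8.1f} MiB")
```

Storage scales linearly with the number of dimensions kept, so going from 768 to 64 dimensions cuts the raw footprint by a factor of 12.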

2. Convolutional Neural Networks (CNNs):

- Feature Hierarchy:
  - CNNs are inherently hierarchical. Early layers detect simple features (e.g., edges, textures), while later layers combine these into more complex features (e.g., shapes, objects).
  - This hierarchical feature learning aligns with the Matryoshka concept. Lower-level features can be seen as nested within higher-level features.
- Downsampling and Feature Maps:
  - CNNs often use downsampling techniques (e.g., pooling) to reduce the spatial dimensions of feature maps. This effectively compresses the information, discarding less important details.
  - The resulting feature maps at different layers represent varying levels of information compression. The deeper you go, the more compressed and abstract the representation becomes.
- Model Optimization:
  - MRL can be applied to CNN backbones (the MRL paper reports results with ResNet), so that a single network yields representations usable at several sizes.
  - This is valuable for adapting CNNs to different hardware or for balancing accuracy and speed.
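The downsampling mentioned above can be shown with a bare 2x2 max pooling applied repeatedly to a toy feature map. This is a sketch in plain NumPy under simplifying assumptions: a single channel, no convolution, and a hypothetical helper name `max_pool_2x2`. It illustrates the spatial compression only, not MRL itself.

```python
import numpy as np


def max_pool_2x2(feature_map: np.ndarray) -> np.ndarray:
    """Halve height and width by taking the maximum of each 2x2 block."""
    h, w = feature_map.shape
    h2, w2 = h // 2, w // 2
    trimmed = feature_map[: h2 * 2, : w2 * 2]  # drop an odd trailing row or column
    return trimmed.reshape(h2, 2, w2, 2).max(axis=(1, 3))


# An 8x8 toy feature map, pooled until a single value remains.
fm = np.arange(64, dtype=np.float32).reshape(8, 8)
level = fm
shapes = [level.shape]
while level.shape[0] > 1:
    level = max_pool_2x2(level)
    shapes.append(level.shape)

print(shapes)
```

Each pooling step keeps one value out of four, so deeper levels summarize progressively larger regions of the input.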

In summary:

The Matryoshka concept provides a useful framework for understanding how AI models can handle information compression. It highlights the importance of hierarchical representations and the ability to access information at different levels of detail. This is particularly relevant to CNNs, which naturally exhibit hierarchical feature learning and information compression through their layered architecture.

Understanding is Compression

The Matryoshka Doll Universe: Exploring Nested Systems and Intelligent Design Across Disciplines

The paper explores the idea that the universe and its components, from the smallest particles to the largest structures, are organized in nested systems, much like Russian Matryoshka dolls. It examines this concept across various fields, including quantum physics, biology, technology, and social structures, showing how complex systems arise from simpler, interconnected parts. The paper suggests a unified model of complexity, proposing that an underlying "Intelligent Design" might be at play, which challenges conventional views about how complexity is organized and originates in the universe. It also notes that the concept of Intelligent Design has historical roots, appearing in the philosophical works of Plato and Aristotle.

Analyzing "The Matryoshka Doll Universe: Exploring Nested Systems and Intelligent Design Across Disciplines" requires a critical approach, considering both its strengths and potential weaknesses. Here's a breakdown of a critical reading:

Strengths:

- Interdisciplinary Scope:
  - The paper's attempt to bridge diverse fields like quantum physics, biology, and social structures is commendable. It encourages a holistic view of complexity.
  - The use of the Matryoshka doll metaphor provides a relatable and accessible way to visualize nested systems.
- Emphasis on Complexity:
  - The paper highlights the intricate organization of the universe, drawing attention to the emergent properties that arise from nested systems.
  - It prompts reflection on the limitations of reductionist approaches that isolate phenomena from their broader context.
- Philosophical Implications:
  - By raising questions about "Intelligent Design," the paper stimulates philosophical inquiry into the origins of complexity and the nature of reality.
  - It points to the long history of this type of thinking by referencing ancient philosophers.

Potential Weaknesses and Criticisms:

- "Intelligent Design" Controversy:
  - The inclusion of "Intelligent Design" is likely to be the most contentious aspect. This concept is often met with skepticism within the scientific community, as it can be perceived as lacking empirical support and potentially conflicting with established scientific theories like evolution.
  - Critics may argue that attributing complexity to "Intelligent Design" can hinder scientific inquiry by providing a premature and potentially untestable explanation.
- Generalization and Oversimplification:
  - While the Matryoshka doll metaphor is useful, there's a risk of oversimplifying the complexities of real-world systems.
  - Critics might argue that the paper doesn't adequately address the nuances and variations within nested systems across different disciplines.
- Lack of Rigorous Empirical Evidence:
  - Depending on the depth of the paper's analysis, critics may point to a need for more rigorous empirical evidence to support its claims, especially regarding "Intelligent Design."
  - The burden of proof is very high when talking about intelligent design, and that type of proof is very hard to produce.
- Potential for Misinterpretation:
  - The concept of nested systems can be interpreted in various ways, and there's a risk of misinterpreting the paper's claims or applying the Matryoshka metaphor inappropriately.

In summary:

The paper offers a thought-provoking perspective on the organization of the universe, but its inclusion of "Intelligent Design" necessitates careful and critical evaluation. It's crucial to distinguish between the metaphorical value of the Matryoshka doll concept and the scientific validity of the paper's claims.